bround

pyspark.sql.functions.bround(col, scale=0)

version: since 2.0.0

Round the given value to scale decimal places using HALF_EVEN rounding mode if scale >= 0 or at integral part when scale < 0.

Scale: the number of decimal places to round to (default 0). A negative scale rounds to the left of the decimal point.
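A minimal sketch of how the scale argument behaves, using Python's standard decimal module as a stand-in for Spark's rounding. This is an assumption for illustration only: bround itself runs on the JVM, and the helper name half_even is hypothetical.

```python
from decimal import Decimal, ROUND_HALF_EVEN


def half_even(value: str, scale: int = 0) -> Decimal:
    """Round value to `scale` decimal places with HALF_EVEN (hypothetical helper)."""
    # 10 ** -scale is the smallest unit kept:
    # 0.1 for scale=1, 1 for scale=0, 10 for scale=-1
    quantum = Decimal(1).scaleb(-scale)
    return Decimal(value).quantize(quantum, rounding=ROUND_HALF_EVEN)


print(half_even("1.85", 1))      # scale > 0: keeps one decimal place
print(half_even("2.5"))          # scale = 0: rounds to a whole number
print(int(half_even("25", -1)))  # scale < 0: rounds to the nearest ten
```

The values are passed as strings so the decimal module sees exactly 1.85 rather than the nearest binary float.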

Runnable Code:

from pyspark.sql import functions as F

# Set up dataframe

data = [{"a": 1.85, "b": 2}, {"a": 1.86}, {"b": 5}]

df = spark.createDataFrame(data)

df = df.drop("b")

# Use function

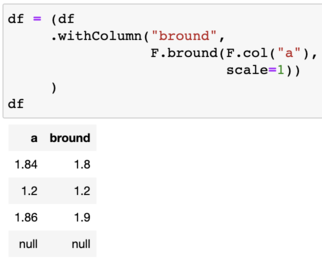

df = df.withColumn("bround", F.bround(F.col("a"), scale=1))

df.show()

| a | bround |
|---|---|
| 1.85 | 1.8 |
| 1.86 | 1.9 |
| null | null |

Usage:

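I normally just use the standard round function, which does what I would expect. The only difference I know of is the rounding mode: bround uses HALF_EVEN, where a tie goes to the nearest even digit (1.85 becomes 1.8), while round uses HALF_UP, where a tie goes away from zero (1.85 becomes 1.9). See the Java docs for java.math.RoundingMode for the specifics.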
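The HALF_EVEN versus HALF_UP difference can be sketched without a Spark session using Python's decimal module. This is an illustration under that assumption, not Spark's own code path, and round_with is a hypothetical helper name.

```python
from decimal import Decimal, ROUND_HALF_EVEN, ROUND_HALF_UP


def round_with(value: str, mode: str) -> Decimal:
    """Round to one decimal place using the given decimal rounding mode."""
    return Decimal(value).quantize(Decimal("0.1"), rounding=mode)


# 1.75 and 1.85 are exact ties at one decimal place; 1.86 is not
for v in ["1.75", "1.85", "1.86"]:
    even = round_with(v, ROUND_HALF_EVEN)
    up = round_with(v, ROUND_HALF_UP)
    print(f"{v}: HALF_EVEN={even} HALF_UP={up}")
```

Only the 1.85 tie comes out differently (1.8 versus 1.9). For 1.75 both modes give 1.8 because the nearest even digit is also the larger one, and 1.86 is not a tie at all.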

returns: Column(sc._jvm.functions.bround(_to_java_column(col), scale))

tags: round, round up, round down, decimal places

© 2023 PySpark Is Rad