array_join

pyspark.sql.functions.array_join(col, delimiter, null_replacement=None)

version: since 2.4.0

Concatenates the elements of the array column col into a single string, separated by delimiter. Null elements are replaced with null_replacement if it is set; otherwise they are skipped.

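col: array column whose elements are concatenated

delimiter: string placed between elements

null_replacement: string substituted for null elements; if None (the default), null elements are skipped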
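To make the null handling concrete, here is a pure-Python sketch of the behavior described above. The helper `array_join_model` is hypothetical and is not part of PySpark; it only models the documented semantics for a single array value. Returning None for a null array is an assumption about how Spark treats a null input, and `str()` stands in for Spark's own string conversion.

```python
from typing import Optional, Sequence


def array_join_model(
    values: Optional[Sequence[object]],
    delimiter: str,
    null_replacement: Optional[str] = None,
) -> Optional[str]:
    """Illustrative model of array_join for one array value (not Spark code)."""
    # Assumption: a null array yields a null result.
    if values is None:
        return None
    if null_replacement is None:
        # No replacement given: null elements are skipped entirely.
        parts = [str(v) for v in values if v is not None]
    else:
        # Replacement given: each null element becomes the replacement string.
        parts = [null_replacement if v is None else str(v) for v in values]
    return delimiter.join(parts)


print(array_join_model([3, None, 5], "~", "*"))  # 3~*~5
print(array_join_model([3, None, 5], "~"))       # 3~5
```

Note that skipping a null also drops its delimiter, so the result gives no hint that an element was missing.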

Runnable Code:

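from pyspark.sql import SparkSession, functions as F

# Reuse the active session if one exists (e.g. in a notebook), otherwise start one

spark = SparkSession.builder.getOrCreate()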

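# Set up dataframe; the second row has no "b", so that element is null

data = [{"a": 1, "b": 2, "c": 2}, {"a": 3, "c": 5}]

df = spark.createDataFrame(data)

# Pack the three columns into a single array column named "a"

df = df.select(F.array(F.col("a"), F.col("b"), F.col("c")).alias("a"))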

# Use function

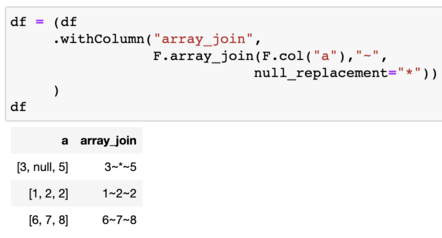

df = df.withColumn("array_join", F.array_join(F.col("a"), "~", "*"))

df.show()

| a | array_join |
|---|---|
| [1, 2, 2] | 1~2~2 |
| [3, null, 5] | 3~*~5 |

Usage:

A simple array function: it turns an array column into a single delimited string column.

returns: Column(sc.\_jvm.functions.array_join(\_to_java_column(col), delimiter, null_replacement))

tags: join array, concatenate array, join list elements

© 2023 PySpark Is Rad