assert_true

pyspark.sql.functions.assert_true(col, errMsg=None)

version: since 3.1.0

Returns null if the input column is true; throws an exception with the provided error message otherwise.

Runnable Code:

from pyspark.sql import functions as F

# Set up dataframe

data = [{"a": 1,"b": 2}]

df = spark.createDataFrame(data)

# Use function

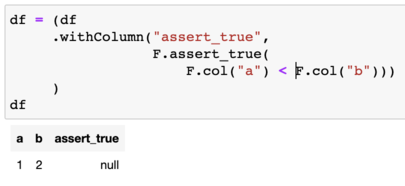

f = (df

.withColumn("assert_true",

F.assert_true(

F.col("a") < F.col("b")))

)

df.show()

| a | b | assert_true |
|---|---|---|
| 1 | 2 | null |

Usage:

I have never used it, but I could see it being useful for some form of validation. Raising an error from inside a column expression is an unusual pattern in PySpark, though. Would you have to create a column just to throw the message?

returns: Column(sc.\_jvm.functions.assert_true(\_to_java_column(col), errMsg))

tags: check two columns, validation

© 2023 PySpark Is Rad