array_position

pyspark.sql.functions.array_position(col, value)

version: since 2.4.0

Collection function: locates the position of the first occurrence of the given value in the given array. Returns null if either argument is null, and 0 if the value is not found.

Note that the returned position is 1-based, not zero-based: a match on the first element returns 1.

value: string or number
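The rules above (null in, null out; 0 for "not found"; 1-based positions) can be modelled in plain Python. This is only a minimal sketch of the documented semantics, not Spark's implementation: `array_position_py` is a hypothetical helper name, and skipping null elements during comparison is an assumption about how nulls inside the array are treated.

```python
from typing import Any, Optional, Sequence


def array_position_py(arr: Optional[Sequence[Any]], value: Any) -> Optional[int]:
    """Hypothetical pure-Python model of the documented behaviour (not Spark code)."""
    # A null array or a null search value yields null.
    if arr is None or value is None:
        return None
    # Positions are 1-based; null elements are assumed never to match.
    for position, element in enumerate(arr, start=1):
        if element is not None and element == value:
            return position
    # 0 signals that the value was not found.
    return 0


print(array_position_py([1, 2, 2], 2))     # 2 (first occurrence, 1-based)
print(array_position_py([3, None, 5], 3))  # 1
print(array_position_py([1, 2, 2], 3))     # 0 (not found)
print(array_position_py(None, 3))          # None
```

Because a real match can never return 0, comparing the result with 0 is a safe way to test whether the value is present.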

Runnable Code:

from pyspark.sql import functions as F

# Set up dataframe (assumes an active SparkSession named `spark`)

data = [{"a":1,"b":2,"c":2},{"a":3,"c":5}]

df = spark.createDataFrame(data)

df = df.select(F.array(F.col("a"), F.col("b"), F.col("c")).alias("a"))

# Use function

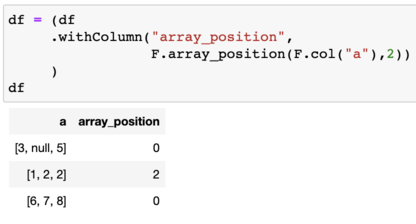

df = (df

    .withColumn("array_position",

        F.array_position(F.col("a"), 3))

)

df.show()

| a | array_position |
|---|---|
| [1, 2, 2] | 0 |
| [3, null, 5] | 1 |

Usage:

Simple array function. Because 0 means "not found", a result greater than 0 indicates that the array contains the value.

returns: Column(sc.\_jvm.functions.array_position(\_to_java_column(col), value))

tags: position in array, where in array, position in list

© 2023 PySpark Is Rad