array_except #

pyspark.sql.functions.**array_except(col1, col2) #

version: since 2.4.0

Collection function: returns an array of the elements in col1 but not in col2, without duplicates.

Runnable Code:

from pyspark.sql import functions as F

# Set up dataframe

data = [{"a": [1,2,2],"b": [3,2,2]},{"b": [1,2,2]},{"a": [4,5,5],"b": [1,2,2]},{"a": [4,5,5]}]

df = spark.createDataFrame(data)

# Use function

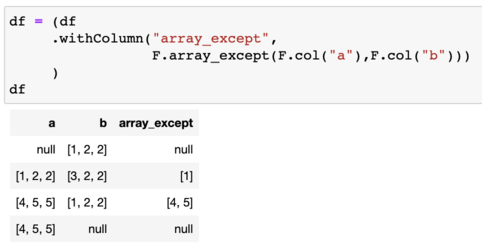

df = (df

.withColumn("array_except",

F.array_except(F.col("a"),F.col("b")))

)

df.show()

| a | b | array_except |

|---|---|---|

| [1, 2, 2] | [3, 2, 2] | [1] |

| null | [1, 2, 2] | null |

| [4, 5, 5] | [1, 2, 2] | [4, 5] |

| [4, 5, 5] | null | null |

Usage:

Simple array function.

returns: Column(sc.\_jvm.functions.array_except(\_to_java_column(col1), \_to_java_column(col2)))

tags: not in array, not in list, list, left join

© 2023 PySpark Is Rad

title: “abs” draft: true

draft: true [docs]def array_except(col1, col2): """ Collection function: returns an array of the elements in col1 but not in col2, without duplicates.

.. versionadded:: 2.4.0

Parameters

----------

col1 : :class:`~pyspark.sql.Column` or str

name of column containing array

col2 : :class:`~pyspark.sql.Column` or str

name of column containing array

Examples

--------

>>> from pyspark.sql import Row

>>> df = spark.createDataFrame([Row(c1=["b", "a", "c"], c2=["c", "d", "a", "f"])])

>>> df.select(array_except(df.c1, df.c2)).collect()

[Row(array_except(c1, c2)=['b'])]

"""

sc = SparkContext._active_spark_context

return Column(sc._jvm.functions.array_except(_to_java_column(col1), _to_java_column(col2)))